By now, you have almost certainly heard that there is a new virtualization tool on the block. This guy moved into that quiet, well-established neighborhood populated with virtual machines and instances, and he is creating quite a stir. Like a non-stop house party for the past six months.

Since we are focused on delivering apps efficiently and helping companies integrate CI/CD with these tools, we are always talking containers. Fast forward to last week, when I was having lunch with a non-engineer friend of mine who works in the tech industry. Shockingly (at least to me) he had not heard of containers. And on a weekly basis we meet with folks that “have heard of it” but do not have a good handle on the details. I figured now is as good a time as any to shed some light on container tech. This is meant to be a primer, and covers the following:

- What are containers?

- Various container tech available for use

- How we see containers being used today

Let’s get started.

What are containers?

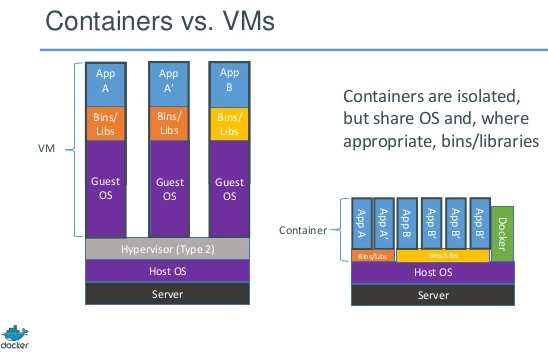

Back to that quiet street. It is populated by virtual machines (or instances for the *Stack and AWS/GCE crowd), a concept that has been around for a long while. To review, a virtual machine is a construct which abstracts hardware. By implementing a hypervisor, one takes a single computer, with CPU, memory, and disk, and divides it to allow a user to run a number of virtual computers (machines). This was created largely to drive up the utilization of physical machines, making data centers more efficient and allowing more workloads to run on the same amount of physical compute hardware. And it works brilliantly. The key here is many operating systems per physical machine, which means many virtual machines to patch and manage as if they were individual computers. Each virtual machine has its own virtual hard drive and its own operating system. But with this comes a ton of excess baggage. All of those VMs likely carry the same copies of binaries, libraries, and configuration files. And, since we are booting multiple kernels on a physical machine, we add a ton of load just to run applications.

Enter the party animal, the container. Rather than providing a whole operating system, container technology virtualizes an operating system. Instead of abstracting hardware, container tech abstracts the resources provided by an operating system. By doing so, each container appears to a user or application like a single OS and kernel. It isn’t, though. There is only one underlying kernel, with all of the containers sharing time on it. This solves a different problem: not driving up compute utilization (though it certainly can) but instead addressing portability and application development and delivery challenges. Remember all of that baggage with a VM? To move it around, you have to bring it along. And the format of the VM may not be supported on a different virtualization tech, requiring it to be converted. Conversely, with a container - so long as a supported Linux version is installed - you build your app, ship the container, and run that code anywhere. The container running on your laptop with your code can run, unmodified, on Amazon EC2, Google Compute Engine, or a bare metal server. This is very powerful. Not to mention that creating orchestrated and automated provisioning of applications based on containers, at scale, is much easier than with VMs. So much so that most large internet companies are already using containers (Google, Netflix, Twitter) and all platform as a service (PaaS) tools use a container technology for launching application workloads.

What are the container technologies available today?

There are a handful of container technologies available in the wild for use today. Over the past year, most everyone has become familiar with Docker, but in reality there are others which predate Docker by a large margin. I’ll describe a few of them, some of the details around the technology, and their popularity.

Solaris Zones

Solaris has had container technology as part of the operating system since 2005. There has been some confusion when referring to Solaris containers, as early on a container was a Solaris Zone combined with resource management. Over time, the container name has been dropped, and they are now referred to exclusively as Solaris Zones.

As with other container technology, Solaris Zones virtualize an operating system, in this case Solaris x86 or SPARC. There are two types of zone: global and non-global. Global zones differ from non-global zones in that they have direct access to the underlying OS and kernel, and full permissions. Non-global zones, by contrast, are the virtualized operating systems. Each of those can be a sparse zone (files shared from the global zone) or a whole root zone (individual files copied from the global zone). In either case, storage use is not large due to the use of ZFS and copy-on-write technology. Up to 8191 non-global zones can be configured per global zone.

Solaris zones are quite popular with system administrators using Solaris systems. They provide a mechanism to drive higher utilization rates, while providing process isolation. They are not portable outside of Solaris environments, so running Solaris workloads on public cloud providers is not an option.

Docker

Docker is a relative newcomer to the container scene. It was originally developed as a functional part of the dotCloud Platform as a Service offering, to enable dotCloud to launch workloads in their PaaS. dotCloud then realized that they had a core technology on their hands which was valuable outside of their own PaaS. As such, in 2013 the company pivoted, created Docker, Inc., and began to focus solely on the open source Docker project.

Docker is comprised of two major components. The first is the Docker engine, a daemon residing on a Linux operating system that manages containers and resources (CPU, memory, networking, etc.) and exposes controls via an API. The second component of the Docker stack, the Docker container itself, is the unit of work and portability. It is comprised of a layered filesystem which includes a base image and instructions on how to install or launch an application. What it does not have are all of the bits which make up a whole OS. When building the image for a Docker container, a user can specify a configuration file (Dockerfile). This file can include the following: a base image which installs only the necessary OS bits, specific information on how an application should be installed or launched, and specifics on how the container should be configured.
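
To make the layered filesystem idea a bit more concrete, here is a toy Python model. It is only a sketch of the concept, not how Docker actually implements layers, and every file name and value in it is made up for illustration. Each layer is a dictionary of paths, and the container sees the layers stacked on top of each other, with writes landing in a thin top layer.

```python
from collections import ChainMap

# Toy model only: each layer maps a file path to its contents.
# All paths and contents are hypothetical.
base_image = {
    "/bin/sh": "shell binary",
    "/etc/os-release": "minimal distro",
}
app_layer = {
    "/app/server.py": "print('hello')",
    "/etc/app.conf": "port=8080",
}
# Writable layer added when a container starts; it begins empty.
container_layer = {}


def container_view(*layers):
    """Merge layers into one view; layers listed first win on lookup."""
    return ChainMap(*layers)


fs = container_view(container_layer, app_layer, base_image)

# A write goes to the top (container) layer and leaves the image untouched.
fs["/etc/app.conf"] = "port=9090"

print(fs["/etc/app.conf"])         # value seen inside the container
print(app_layer["/etc/app.conf"])  # value still stored in the image layer
print(sorted(fs))                  # every path visible to the container
```

Because the image layers are never modified, many containers can share the same base image, and each one only pays for what it changes.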

The key component to the rapid adoption of Docker is portability. The basis of this is the Docker-developed open source library, libcontainer. Through this library, virtually any Linux operating system or public or private cloud provider can run a Docker container - unmodified. Code that was developed on a laptop and placed into a container will work without changes on, for example, Amazon Web Services. Libcontainer was the first standardized way to package up and launch workloads in an isolated fashion on Linux.

Rocket

In response to Docker, including a number of perceived issues in the libcontainer implementation, CoreOS went to work on an alternative Linux-based container implementation. The CoreOS implementation also uses constructs to instantiate applications, isolate processes, and manage resources. Rocket as a project is focused on providing a container runtime that is composable, secure, distributed in nature, and open. To support this, their initial specification and development effort - Rocket - is focused on providing the following:

- Rocket: A command line tool for launching and managing containers

- App Container Spec (appc):

  - App Container Image: A signed/encrypted tgz that includes all the bits to run an app container

  - App Container Runtime: The environment the running app container should be given

  - App Container Discovery: A federated protocol for finding and downloading an app container image
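
As a rough illustration of the "tgz with all the bits" idea, the Python sketch below packs a manifest and a tiny root filesystem into a gzipped tarball in memory. The `manifest` plus `rootfs/` layout and the manifest field names reflect my reading of the appc spec and should be treated as assumptions, as should the image name, version string, and file contents. Signing and encryption are left out entirely.

```python
import io
import json
import tarfile


def build_image(name, exec_argv, files):
    """Pack a manifest and a root filesystem into a gzipped tarball (bytes)."""
    # Field names are assumptions based on the appc spec; values are placeholders.
    manifest = {
        "acKind": "ImageManifest",
        "acVersion": "0.1.0",
        "name": name,
        "app": {"exec": exec_argv, "user": "0", "group": "0"},
    }
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:

        def add(path, data):
            info = tarfile.TarInfo(path)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))

        add("manifest", json.dumps(manifest).encode())
        for path, data in files.items():
            add("rootfs/" + path.lstrip("/"), data)
    return buf.getvalue()


# Hypothetical app: a single shell script under /bin.
image = build_image(
    "example.com/hello",
    ["/bin/hello"],
    {"/bin/hello": b"#!/bin/sh\necho hello\n"},
)

# Read the archive back to show what a runtime would find inside it.
with tarfile.open(fileobj=io.BytesIO(image), mode="r:gz") as tar:
    names = tar.getnames()
    loaded = json.load(tar.extractfile("manifest"))

print(names)
print(loaded["name"], loaded["app"]["exec"])
```

The point is simply that the image is a single self-describing file: everything needed to run the app travels with it.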

The Rocket project prototype was announced in December 2014. Development has been swift, with new features and functionality added regularly.

How we see containers being used today

Container adoption is something that we have seen evolve very rapidly. In a very short period of time, we have seen a number of companies begin to adopt container deployment. Most of the early adopters are still utilizing the technology for development, providing an easy way for developers to write and deploy code to test/QA while still providing a deployment artifact which operations can peer into - confirming the details of what is being deployed. Our container of choice for these initiatives is Docker, which has a well-developed ecosystem of official software and tool images.

In addition to development efforts, we have worked with organizations to implement container technology for testing purposes. Containers are very well suited for this type of workload. They provide a mechanism to instantiate a number of containers with a given application, including prerequisite services, which is very fast, utilizes few resources, and leaves little behind when testing is complete. In addition, most CI/CD platforms, including Jenkins and Shippable, have hooks which are easily extended to use containers for testing purposes. For many companies, this is as far as they are comfortable taking containers in their workflows.

There are a handful of organizations who are embracing DevOps and see value in placing containers into production today. We find these organizations very interesting and exciting to work with. As a whole, attempting to place containers into production requires solving a number of critical issues, namely cross-host networking, managing persistent data, and service discovery. The good news is that these are being addressed rapidly, not only by the container developers but also by other technology providers. By utilizing our Application Delivery methodology, we are able to identify services which are candidates for containerization and production deployment, and provide the appropriate architecture and processes to migrate or deploy these into production. We also employ the same methodology to define roadmaps for microservices-based application development and delivery. With the companies we are working with on container deployment, we have mainly utilized Docker and the emerging platform tools. The ecosystem of operations tools and continuous delivery integration supporting Docker is more mature than that of other container technologies, and for enterprise deployments this is an important consideration.

In summary, I hope that this has provided some insight into containers, the players, and how they can be used. There is clearly a ton of work still to be done with the technology, but for now, if you or your company is willing to embrace containers, you just may see time savings, increased productivity, reduced development and delivery timelines, and production deployment flexibility.
